Why Imitation Learning Fails When Robots Have Two Right Answers

Averaging expert demonstrations is the very thing that prevents machines from making confident, successful choices.

Who would have thought that a million-dollar problem of robot indecision would be solved by a technique designed to generate digital art?

In our robotics lab, the phrase “Diffusion Policy” has become the latest buzzword, quickly shifting from a niche research idea to a core training method. The sheer speed of its adoption—and the dramatic boost in robot dexterity it enables—is what drove me to dig deeper. After all, complex robots designed for manufacturing and dexterous manipulation shouldn’t need help from a technique invented to generate beautiful, imaginary artwork. Yet, the solution to the robot’s biggest dilemma came from the world of generative AI and image denoising.

If you’ve heard of Stable Diffusion or DALL-E, you know the power of diffusion models to create stunning images from a text prompt. The startling realization across the robotics community was this: the same generative technique that taught AI to dream up a picture can also teach a robot to decide what to do. It provided the missing piece for one of the most frustrating problems in machine learning: the inability of robots to handle simple choices.

What Exactly Is a Robot “Policy”?

To understand the breakthrough, we must first define the problem. In robotics, a policy is the brain’s rulebook. It is the core algorithm that dictates the robot’s action based on what it sees. Think of it as a function:

action = policy(observation)

If the observation is an image of a red block, the policy tells the robot to move its gripper to coordinates (x, y, z). Training a policy is simply teaching the robot the most successful mapping from what it sees to what it should do.
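To make the idea of "policy as function" concrete, here is a minimal Python sketch. Everything in it is hypothetical: the `Observation` type assumes the camera image has already been turned into named object positions (a real policy would consume raw pixels), and `reach_red_block` is a hand-written stand-in for a learned policy.

```python
from typing import Callable, Dict, Tuple

# Hypothetical types: a pre-processed observation maps object names to
# positions, and an action is a target gripper position (x, y, z).
Observation = Dict[str, Tuple[float, float, float]]
Action = Tuple[float, float, float]
Policy = Callable[[Observation], Action]


def reach_red_block(observation: Observation) -> Action:
    """Toy hand-written policy: send the gripper to the red block."""
    return observation["red_block"]


policy: Policy = reach_red_block
action = policy({"red_block": (0.4, 0.1, 0.02)})
print("gripper target:", action)
```

Training replaces the hand-written body of the function with parameters learned from demonstrations; the signature stays the same.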

The most common training method is Imitation Learning (IL), specifically Behavioral Cloning (BC). This is essentially supervised learning: demonstrate to the robot a successful task, and make it mimic every step.

The Decisive Flaw in Traditional Training

For years, the Achilles’ heel of imitation learning has been the multimodal action dilemma. This problem arises because many real-world tasks have more than one equally correct way to succeed. When the robot sees a situation, there are multiple valid moves it can make, and it needs to choose one.

Imagine trying to teach a robotic arm to clear a clutter of objects on a table. Two human experts provide demonstrations:

Expert A always clears the space by pushing the objects to the left.

Expert B always clears the space by pushing the objects to the right.

Both are successful. A traditional Behavioral Cloning (BC) policy handles this by, in effect, averaging the actions. It treats the problem as a simple regression, typically minimizing mean squared error, and the single output that minimizes that error across all demonstrations is their mean. In our example, the policy looks at the “Push Left” trajectory and the “Push Right” trajectory and lands on their midpoint. The result is a blurry, non-committal motion: the robot moves its arm straight down the middle. This averaged action commits to neither side, so it fails to clear the clutter, and the policy fails whenever the task allows for a choice. The robot creates a statistical Frankenstein of an action that corresponds to no successful path at all.
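The averaging effect is easy to reproduce in a few lines. The sketch below is a toy, not a real BC pipeline: it assumes the action is a single number (-1.0 for "push left", +1.0 for "push right"), invents the demonstration data, and fits one predicted action by gradient descent on mean squared error.

```python
import random

random.seed(0)

# Hypothetical 1-D action: -1.0 means "push left", +1.0 means "push right".
demos = [-1.0 + random.gauss(0, 0.05) for _ in range(500)]   # Expert A
demos += [1.0 + random.gauss(0, 0.05) for _ in range(500)]   # Expert B


def mse(prediction, targets):
    """Mean squared error between one predicted action and all demos."""
    return sum((prediction - t) ** 2 for t in targets) / len(targets)


# "Train" a single predicted action with gradient descent on the MSE loss.
prediction = 0.7          # start close to the "push right" mode
learning_rate = 0.1
for _ in range(200):
    gradient = sum(2 * (prediction - t) for t in demos) / len(demos)
    prediction -= learning_rate * gradient

mean_action = sum(demos) / len(demos)
closest_demo_gap = min(abs(prediction - t) for t in demos)

print("learned action:", round(prediction, 3))
print("mean of demos:", round(mean_action, 3))
print("distance to nearest demonstration:", round(closest_demo_gap, 3))
```

Even though training starts near "push right", the loss pulls the prediction to the mean of the demonstrations, close to zero, which is far from anything either expert actually did.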

Modeling Possibilities Instead of Averages

The key insight that led to Diffusion Policy was that the models behind image generation are built to represent entire distributions of data with exceptional fidelity, and the same machinery can represent a distribution over robot actions.

Diffusion models, popularized by stunning image generators like Stable Diffusion, do not predict a single, average output. Instead, they are trained to model the entire space of valid data.

The Analogy: Sharpening the “Noisy” Intent

The Starting Point is Chaos (Pure Noise): When the Diffusion Policy is asked to produce an action, it starts not with a partially decided action, but with pure, random noise. Imagine this as a TV screen of static, where every possible action is scrambled together.

Iterative Denoising (Committing to a Mode): The policy then begins a conditional denoising process—iteratively removing the noise while conditioning on the robot’s visual observation and the goal. The crucial difference is that the network has learned the shape of the action distribution, which includes the distinct “Push Left” mode and the “Push Right” mode.

As the noise is removed, the policy acts like a smart filter. It is forced to snap the chaotic input toward one of the learned, high-probability areas. It will confidently refine the noise until a clear, sharp, successful action is revealed: “Execute Push Left.”
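The two steps above can be sketched in a toy setting. This is an illustration under heavy assumptions, not the Diffusion Policy implementation: the action is a single number, the trained network is replaced by a closed-form denoiser for a two-mode Gaussian mixture (`denoise` is hypothetical), the observation conditioning is left out, and the sampler is a plain Euler step over a decreasing noise schedule chosen for brevity.

```python
import math
import random

MODES = (-1.0, 1.0)   # hypothetical 1-D actions: push left, push right
MODE_STD = 0.05       # spread of the demonstrations around each mode


def denoise(x, sigma):
    """Stand-in for the trained network: expected clean action given noisy x.

    Closed form for an equal-weight two-mode Gaussian mixture; a real policy
    would learn this from data and also condition on the observation.
    """
    var = MODE_STD ** 2 + sigma ** 2
    # How strongly each mode explains x at this noise level.
    logits = [-(x - m) ** 2 / (2 * var) for m in MODES]
    top = max(logits)
    weights = [math.exp(logit - top) for logit in logits]
    total = sum(weights)
    shrink = MODE_STD ** 2 / var
    # Posterior mean within each mode, mixed by the mode weights.
    return sum(w / total * (m + shrink * (x - m)) for w, m in zip(weights, MODES))


def sample_action(rng, steps=50, sigma_max=5.0, sigma_min=0.01):
    """Start from pure noise and iteratively denoise toward a clean action."""
    ratio = sigma_min / sigma_max
    sigmas = [sigma_max * ratio ** (i / (steps - 1)) for i in range(steps)]
    x = rng.gauss(0.0, sigma_max)            # the "TV static" starting point
    for sigma, sigma_next in zip(sigmas, sigmas[1:]):
        direction = (x - denoise(x, sigma)) / sigma
        x += (sigma_next - sigma) * direction  # remove a little noise
    return x


rng = random.Random(0)
actions = [sample_action(rng) for _ in range(200)]
left = sum(1 for a in actions if a < 0)
print("push left:", left, "push right:", len(actions) - left)
print("first five actions:", [round(a, 2) for a in actions[:5]])
```

Each sample ends up near one mode or the other rather than in the middle, and which mode it lands in depends only on the random starting noise. That is the behavior the averaging policy could not produce.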

This technique is responsible for teaching robots to perform complex tasks, from flipping a mug and pouring sauce onto a pizza to high-precision assembly. Because the model is designed to recover a clear, high-quality sample from a noisy input, it avoids the blurry middle ground entirely. This ability to commit to a clear, successful intention is why Diffusion Policy has caused such a revolution, transforming hesitant, indecisive machines into dexterous, confident problem-solvers.

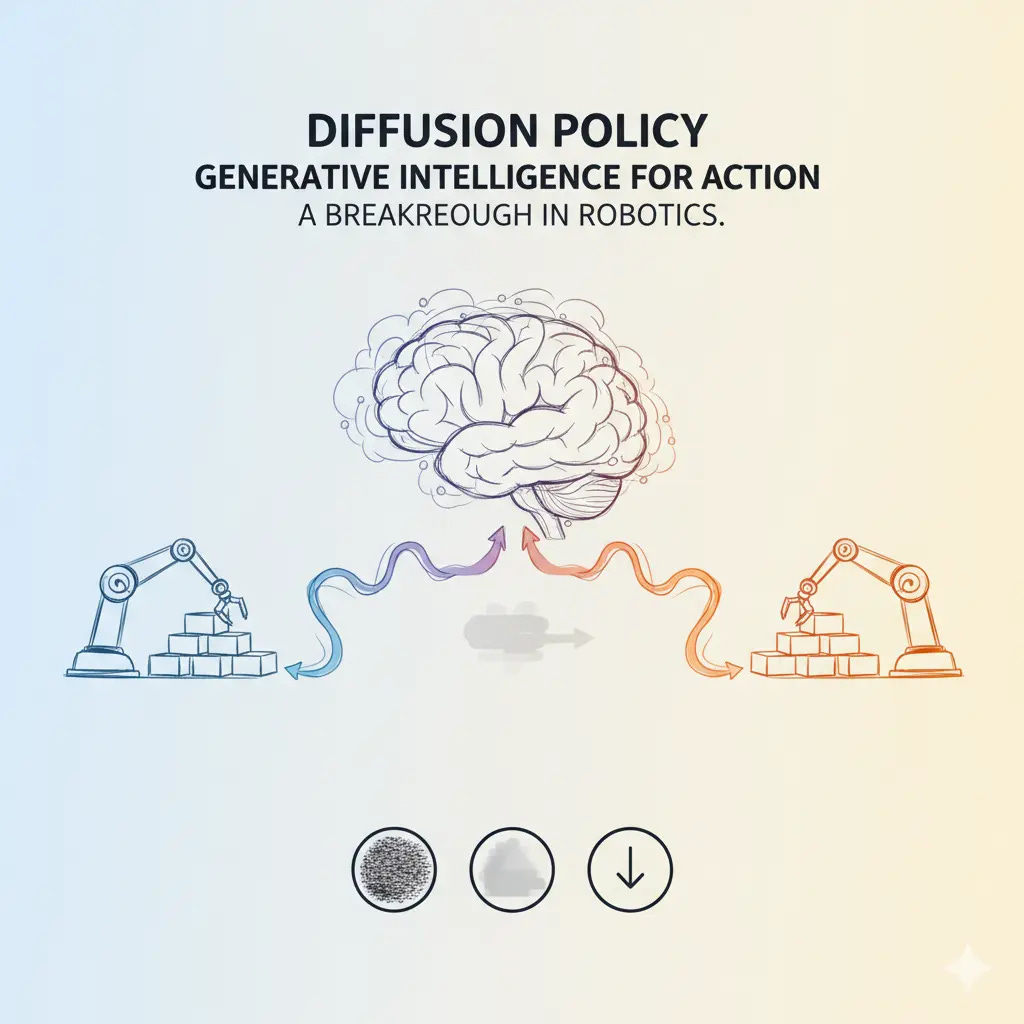

The Next Frontier: Generative Intelligence for Action

Diffusion Policy’s true power is that it unlocked generative intelligence for action. Just as a generative AI artist can take the prompt, “Draw a dog wearing a hat,” and produce infinite variations of valid images, Diffusion Policy takes the prompt, “Clear the table,” and can generate infinite variations of valid, successful trajectories.

This is a profound shift: the robot is no longer a simple mimic; it is a creative planner, able to choose the best style of action for the moment. The success of this cross-domain leap, where the solution to a mechanical problem was found in a digital art tool, raises an intriguing question: What other hidden solutions are waiting in the unexpected corners of AI research? Will the secret to safer human-robot collaboration come from the language models that power chatbots? Or will the path to true lifelong robot learning be hidden within the algorithms that predict weather patterns? The only thing certain is that the next leap will likely come from the place we least expect.

You’re fascinated by robots, but the field feels like a wall of complex math and obscure jargon, with every article assuming you already have a PhD.

Build-Robotz takes that wall and turns it into building blocks. Twice a week, I explore one inspiring concept, making the complex feel intuitive.
